Goal and Background

The goal of this lab was to introduce photogrammetric tasks on aerial photographs and satellite images. It focused specifically on the mathematics for calculating photographic scale, measuring the areas and perimeters of features, and calculating relief displacement. It also covered stereoscopy and orthorectification. Stereoscopy draws on the science of depth perception to view an image in three dimensions. Orthorectification corrects the positional errors in an aerial photograph or satellite image that are caused by terrain relief and camera or sensor tilt.

Photographs and images used in this lab are:

- National Agriculture Imagery Program (NAIP)

- Digital Elevation Model (DEM)

- Digital Surface Model (DSM)

- Images from Erdas Imagine

Methods

Part 1: Scales, measurements, and relief displacement

In this part of the lab, we measured the scale of an image with a ruler, measured the area of a feature in an aerial photograph, and calculated relief displacement from an object's height. First, we measured the distance between two points on an image and compared it with the ground distance between them, using the equation Scale = photo distance / ground distance. With a photo distance of 2.7 inches and a ground distance of 8,822.7 feet (105,872.4 inches), the scale came out to approximately 1:39,212. Next, we calculated the scale of a second image from its camera parameters using the equation S = f / (H - h), where S = scale, f = focal length of the lens, H = altitude of the camera above sea level, and h = elevation of the terrain. We were given H = 20,000 ft, f = 152 mm, and h = 796 ft. After converting f to feet (152 mm is about 0.4987 ft), the scale of the image came out to approximately 1:38,509.

Next, we measured the area of a lagoon. To do this, we used the measure polygon tool in Erdas Imagine and digitized around the lagoon. Figure 1 below shows the digitized lagoon.
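The two scale calculations above can be checked with a short script. This is a minimal sketch, not part of the Erdas Imagine workflow, and the function and constant names are hypothetical. What it makes explicit is the unit handling: both distances must be in the same units (here inches) before dividing, and the focal length must be converted from millimeters to feet to match H and h.

```python
# Photo-scale checks for Part 1, using the values reported in the lab.
INCHES_PER_FOOT = 12.0
MM_PER_FOOT = 304.8


def scale_from_distances(photo_in: float, ground_ft: float) -> float:
    """Scale denominator from Scale = photo distance / ground distance.

    The ground distance is converted to inches so the ratio is unitless.
    """
    return (ground_ft * INCHES_PER_FOOT) / photo_in


def scale_from_camera(focal_mm: float, flying_height_ft: float, terrain_ft: float) -> float:
    """Scale denominator from S = f / (H - h).

    The focal length is converted from millimeters to feet so it matches H and h.
    """
    focal_ft = focal_mm / MM_PER_FOOT
    return (flying_height_ft - terrain_ft) / focal_ft


print(f"Ruler-based scale:  1:{scale_from_distances(2.7, 8822.7):,.0f}")        # 1:39,212
print(f"Camera-based scale: 1:{scale_from_camera(152.0, 20000.0, 796.0):,.0f}")  # 1:38,509
```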

Figure 1: Digitized lagoon to measure area.
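The measure polygon tool reports the area (and perimeter) of a digitized outline directly, and the lab does not describe how Erdas Imagine computes these internally. One standard way to get both values from a polygon's vertices is the shoelace formula, sketched below under the assumption that the vertices are in a projected coordinate system measured in meters. The function name is hypothetical, and the rectangle in the usage lines is a made-up test shape, not the lagoon.

```python
import math


def polygon_area_perimeter(vertices):
    """Return (area, perimeter) of a simple polygon given as [(x, y), ...].

    Area uses the shoelace formula; perimeter sums the edge lengths.
    Coordinates are assumed to be projected (e.g. meters), so area comes
    out in square units and perimeter in linear units.
    """
    n = len(vertices)
    if n < 3:
        raise ValueError("a polygon needs at least three vertices")
    twice_area = 0.0
    perimeter = 0.0
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]  # wrap around to close the ring
        twice_area += x1 * y2 - x2 * y1
        perimeter += math.hypot(x2 - x1, y2 - y1)
    return abs(twice_area) / 2.0, perimeter


# Hypothetical 100 m x 50 m rectangle, not the digitized lagoon.
area, perim = polygon_area_perimeter([(0, 0), (100, 0), (100, 50), (0, 50)])
print(area, perim)  # 5000.0 300.0
```

Taking the absolute value means the result does not depend on whether the outline was digitized clockwise or counterclockwise.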

The last section of Part 1 had us calculate relief displacement from object height. Using a JPEG image of a smokestack on the UWEC campus, we used the equation d = (h x r) / H, where d = relief displacement, h = real-world height of the object, r = radial distance on the photo from the principal point to the top of the displaced object, and H = height of the camera above the local datum. With h = 1,604.5 inches, r = 10.5 inches, and H = 3,980 feet (47,760 inches), this worked out to (1,604.5 x 10.5) / 47,760, or about 0.353 inches. This means the top of the smokestack would need to be moved about 0.353 inches toward the principal point to correct the displacement.
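The main pitfall in the relief displacement formula is mixed units: h and H must be in the same units before dividing, and d then takes the units of r. The sketch below (function name hypothetical) repeats the smokestack calculation with H converted from feet to inches.

```python
def relief_displacement(object_height: float, radial_distance: float, camera_height: float) -> float:
    """Relief displacement d = h * r / H.

    h (object height) and H (camera height above the local datum) must share
    units; d is then returned in the units of r (the radial distance on the photo).
    """
    return object_height * radial_distance / camera_height


h_in = 1604.5       # smokestack height, inches
r_in = 10.5         # radial distance on the photo, inches
H_in = 3980 * 12    # 3,980 ft converted to inches (47,760 in)
print(f"{relief_displacement(h_in, r_in, H_in):.3f} in")  # 0.353 in
```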

Part 2: Stereoscopy

The aim of this part of the lab was to generate 3D images using an elevation model. First, we looked at two images of the Eau Claire area that showed relief displacement. Figure 2 below shows an example of relief displacement on the UWEC campus.

Figure 2: Relief displacement is seen in the image on the left and is corrected in the image on the right.

Relief displacement is visible in the image on the left, where we can see the sides of the buildings. It has been corrected in the image on the right, where we can see only the tops of the buildings. We looked at these images to get a feel for how relief displacement can alter an image. Next, we used the Terrain Anaglyph tool to generate anaglyph images, which we then analyzed through a pair of 3D glasses. The tool combines an image with an elevation model to produce an image that appears three-dimensional, giving us a new way to look at the data. Once we put on the 3D glasses, the difference between the images is clear. Figure 3 below shows an example of an anaglyph image.

Figure 3: The anaglyph image (left) appears 3D when viewed through 3D glasses. The original image is on the right.
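The Terrain Anaglyph tool builds the anaglyph for us, and the lab does not describe its internal algorithm. The sketch below only illustrates the general idea behind a red/cyan anaglyph: a second, slightly offset view is synthesized by shifting each pixel horizontally in proportion to its elevation, and the two views are placed in different color channels so that colored 3D glasses send one view to each eye. The function name, the `max_shift_px` parameter, and the linear elevation-to-shift mapping are assumptions, not the ERDAS implementation.

```python
import numpy as np


def simple_anaglyph(gray: np.ndarray, dem: np.ndarray, max_shift_px: int = 4) -> np.ndarray:
    """Return a (rows, cols, 3) red/cyan anaglyph from a grayscale image and a DEM.

    The DEM must be co-registered with the image (same shape). A synthetic
    second view is made by sampling each row at a horizontal offset that grows
    linearly with elevation; the original image goes to the red channel and
    the synthetic view to green and blue.
    """
    if gray.shape != dem.shape:
        raise ValueError("image and DEM must have the same shape")
    cols = gray.shape[1]
    span = float(dem.max() - dem.min()) or 1.0   # flat DEM: avoid dividing by zero
    shift = np.rint((dem - dem.min()) / span * max_shift_px).astype(int)

    # Column to sample for each output pixel, clamped to stay inside the image.
    src_cols = np.clip(np.arange(cols)[None, :] + shift, 0, cols - 1)
    other_view = np.take_along_axis(gray, src_cols, axis=1)
    return np.dstack([gray, other_view, other_view])


rng = np.random.default_rng(0)
gray = rng.integers(0, 256, size=(50, 60), dtype=np.uint8)
dem = np.tile(np.linspace(0.0, 100.0, 60), (50, 1))  # hypothetical west-to-east slope
rgb = simple_anaglyph(gray, dem)
print(rgb.shape)  # (50, 60, 3)
```

A larger `max_shift_px` exaggerates the apparent relief; real tools derive the parallax from the viewing geometry rather than a fixed pixel budget.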

Part 3: Orthorectification

This was the biggest section of the lab and introduced us to the Erdas Imagine Leica Photogrammetry Suite (LPS). LPS is used in digital photogrammetry for triangulation, orthorectification, extraction of digital surface and elevation models, and much more. We used LPS to orthorectify images and create a planimetrically true orthoimage.

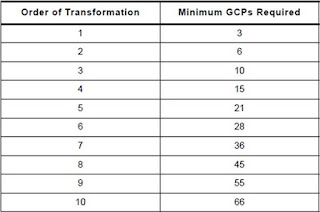

In the first section, we used an already orthorectified image as a source for ground control measurements. We then created a new project in LPS and began creating ground control points (GCPs). In Figure 4 below, the already orthorectified reference image is on the left and the image we were creating GCPs for is on the right.

Figure 4: Creating GCPs for the image on the right by using a reference image.

The coordinates for the 12 GCPs we needed to create were given to us in the directions. If a point was not within 10 meters of its given coordinates, we needed to redo it. Fortunately, most of my GCPs were correct the first time. Figure 4 shows nine of the 12 required GCPs. After creating all 12, we set the vertical reference system and collected elevation information for all of the horizontal reference GCPs created in the first section of Part 3. With that done, we moved on to the second image in the block.
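The 10-meter acceptance rule for GCPs is a simple distance test. The sketch below assumes the placed and given coordinates are (easting, northing) pairs in the same meter-based projected coordinate system; the function name and the sample coordinates are hypothetical, not the lab's actual GCP values.

```python
import math

TOLERANCE_M = 10.0  # a GCP farther than this from its given coordinates must be redone


def gcp_needs_redo(placed_xy, given_xy, tolerance=TOLERANCE_M):
    """Return (needs_redo, offset_m) for one GCP.

    Both arguments are (easting, northing) pairs in the same meter-based
    projected coordinate system, so the straight-line distance is in meters.
    """
    offset = math.dist(placed_xy, given_xy)
    return offset > tolerance, offset


# Hypothetical coordinates, not the lab's actual GCP list.
given = {"GCP1": (617000.0, 4965000.0), "GCP2": (618250.0, 4963800.0)}
placed = {"GCP1": (617004.0, 4965003.0), "GCP2": (618262.0, 4963795.0)}
for name, target in given.items():
    redo, offset = gcp_needs_redo(placed[name], target)
    print(f"{name}: off by {offset:.1f} m -> {'redo' if redo else 'ok'}")
# GCP1: off by 5.0 m -> ok
# GCP2: off by 13.0 m -> redo
```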

Next, we defined the Sensor Model for the second image in a similar way. We used the GCPs we had just collected to create GCPs in a new image that overlaps the one we had been working on. We only created GCPs for points that appeared in both images; points that occurred in only one image did not need a second GCP. Once this was finished, we ended up with the result pictured below in Figure 5.

Figure 5: The result of creating GCPs in two overlapping images.

Next, we ran tie point collection, which measures the image coordinate positions of ground points (tie points) appearing in the overlapping area of the two images shown in Figure 5. Running this process ties the two images together. Figure 6 below shows the screen after the automatic tie point generation had finished running.

Figure 6: Auto tie completion.
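LPS generated the tie points automatically, and the lab does not describe its matching algorithm. One common way to find the same feature in two overlapping images is area-based matching with normalized cross-correlation (NCC): a small patch around a point in one image is compared against candidate windows in a search area of the other image, and the best-scoring window is taken as the match. The sketch below implements that idea with NumPy; the function names, patch size, and search radius are assumptions, not LPS settings. The usage lines build a synthetic image pair with a known offset so the result can be checked.

```python
import numpy as np


def ncc(a: np.ndarray, b: np.ndarray) -> float:
    """Normalized cross-correlation of two equally sized patches, in [-1, 1]."""
    a = a.astype(float) - a.mean()
    b = b.astype(float) - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0


def match_point(left, right, row, col, half=5, search=10):
    """Find the window in `right` that best matches the patch centered at
    (row, col) in `left`, searching +/- `search` pixels in each direction.

    Returns (best_row, best_col, best_score). Assumes (row, col) is at least
    `half` pixels from every edge of `left`.
    """
    template = left[row - half:row + half + 1, col - half:col + half + 1]
    best = (row, col, -1.0)
    for r in range(row - search, row + search + 1):
        for c in range(col - search, col + search + 1):
            if (r - half < 0 or c - half < 0
                    or r + half >= right.shape[0] or c + half >= right.shape[1]):
                continue  # candidate window would fall off the image
            window = right[r - half:r + half + 1, c - half:c + half + 1]
            score = ncc(template, window)
            if score > best[2]:
                best = (r, c, score)
    return best


rng = np.random.default_rng(1)
left = rng.random((80, 80))
right = np.roll(left, shift=(3, -4), axis=(0, 1))  # same scene, 3 rows down and 4 columns left
r, c, score = match_point(left, right, 40, 40)
print(r, c, round(score, 3))  # 43 36 1.0
```

The brute-force search is slow but easy to follow; production matchers typically add image pyramids and subpixel refinement.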

Once the process ran, we checked that the points were tied in the correct places. All of the points were in the correct areas, so we moved on to triangulating the images. After that, only one step remained: running the Ortho Resampling Process, which created the final image we had been working toward throughout this part of the lab. Figure 7 below shows the final product, with the two overlapping images combined into one. Figure 8 shows the same image zoomed in to show how well the GCPs worked to create a smooth final product.
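In LPS, ortho resampling uses the camera positions and orientations solved during triangulation together with an elevation model. The sketch below shows the core idea for a much simpler, hypothetical case: a single vertical photo with zero tilt and a known camera position. For each output ground cell it looks up the DEM height, projects the ground point into the photo with the zero-rotation form of the collinearity equations, and copies the nearest pixel. All parameter names are illustrative; this is not how LPS is implemented, and a real workflow would also apply the interior orientation and the full rotation matrix from triangulation.

```python
import numpy as np


def orthorectify_vertical(photo, dem, x0, y0, gsd, focal_mm, pixel_mm,
                          cam_x, cam_y, cam_z):
    """Resample a vertical (untilted) photo onto a north-up ground grid.

    Zero-rotation collinearity equations, with (Xc, Yc, Zc) the camera position:
        x = f (X - Xc) / (Zc - Z),   y = f (Y - Yc) / (Zc - Z)
    The principal point is assumed to be the photo's center pixel, and the
    output takes the nearest photo pixel (0 where the ray misses the photo).
    """
    rows, cols = dem.shape
    X = x0 + np.arange(cols)[None, :] * gsd      # easting of each output column (m)
    Y = y0 - np.arange(rows)[:, None] * gsd      # northing decreases down the grid (m)
    mm_per_m = focal_mm / (cam_z - dem)          # local photo scale, uses DEM height Z
    x_mm = (X - cam_x) * mm_per_m
    y_mm = (Y - cam_y) * mm_per_m

    pr, pc = photo.shape
    col = np.rint(pc / 2 + x_mm / pixel_mm).astype(int)
    row = np.rint(pr / 2 - y_mm / pixel_mm).astype(int)  # image rows grow downward
    inside = (row >= 0) & (row < pr) & (col >= 0) & (col < pc)

    ortho = np.zeros(dem.shape, dtype=photo.dtype)
    ortho[inside] = photo[row[inside], col[inside]]
    return ortho


# Hypothetical check: flat terrain, camera 1,000 m up, a 100 mm lens and 0.1 mm
# pixels give 1 m per pixel, so the ortho grid is just a window of the photo.
photo = np.arange(100 * 100).reshape(100, 100)
ortho = orthorectify_vertical(photo, np.zeros((20, 20)), x0=-10.0, y0=10.0,
                              gsd=1.0, focal_mm=100.0, pixel_mm=0.1,
                              cam_x=0.0, cam_y=0.0, cam_z=1000.0)
print(np.array_equal(ortho, photo[40:60, 40:60]))  # True
```

Because the DEM height enters the scale term for every cell, raised terrain is sampled from a different photo position than flat ground, which is exactly how orthorectification removes relief displacement.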

Results

Figure 7: The final result of orthorectification.

Figure 8: Zoomed in on the final image to show the minimal difference between the two combined images.

In the end, the two overlapping images were combined incredibly well. All the work that went into this process produced a smooth and accurate image. Orthorectification is widely used to correct aerial photographs and satellite images. It can be a long process if many GCPs are needed, but it is ultimately worth it.

This whole lab was incredibly useful for learning photogrammetric tasks. It was intense and took quite a few hours to complete, but it was ultimately worth it. I learned many new ways to alter images to create better results.

Data Sources

National Agriculture Imagery Program (NAIP) images are from the United States Department of Agriculture, 2005.

Digital Elevation Model (DEM) for Eau Claire, WI is from the United States Department of Agriculture Natural Resources Conservation Service, 2010.

Lidar-derived digital surface model (DSM) for sections of Eau Claire and Chippewa counties is from the Eau Claire County and Chippewa County governments, respectively.

SPOT satellite images are from Erdas Imagine, 2009.

Digital Elevation Model (DEM) for Palm Springs, CA is from Erdas Imagine, 2009.

National Aerial Photography Program (NAPP) 2 meter images are from Erdas Imagine, 2009.